One might be called a “programmer” upon completing a bachelor's degree in Computer Science. Such a graduate has a theoretical framework of computer science concepts, knows some sorting algorithms and data structures, and has already written a fair amount of code in several object-oriented languages, and even some assembly.

Even though students are introduced to various programming languages, the “market” does not automatically consider them capable Junior Software Developers. Almost every junior job requires some “working experience”, and after graduation students are often left “inexperienced” in the languages used in the outside world, such as .NET, Objective-C (iOS), and Ruby, which is mostly used in conjunction with Rails.

But does using a language as part of a curriculum actually equip the student with adequate experience? Well, I think this depends on the student. If I had to answer with a yes or no, I would say “YES!”, but my background is not really in human resources.

Usually what counts is the number of projects and the amount of material accumulated alongside one's studies, ideally built in parallel with them. I wish I could go back in time.

Well, let's work towards getting that experience, that self-induced experience. The dilemma that arises is: should we stick with the languages we have already learned, or should we learn something new?

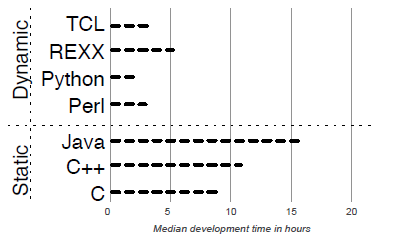

Usually people stick to what they already know and are quick to drop anything dynamic like Ruby and Python, basing their reasoning on performance. They argue and debate, mumbling all sorts of valid “mumbo jumbo”, but what really matters most: productivity or performance?